One of the things that’s become quite obvious, in the various anti-vax comments that I’ve followed and responded to online, is that people with ‘alt’ views have very firm ideas on what constitutes ‘the truth’. And it’s not something that mainstream organisations, authorities, or science[A] are seen as offering.

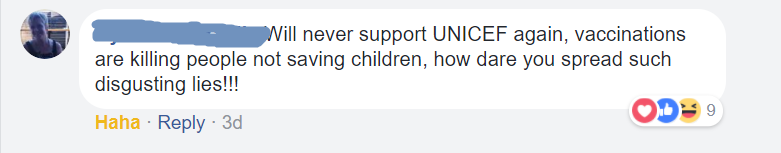

And so (on a new UNICEF New Zealand post) we see:

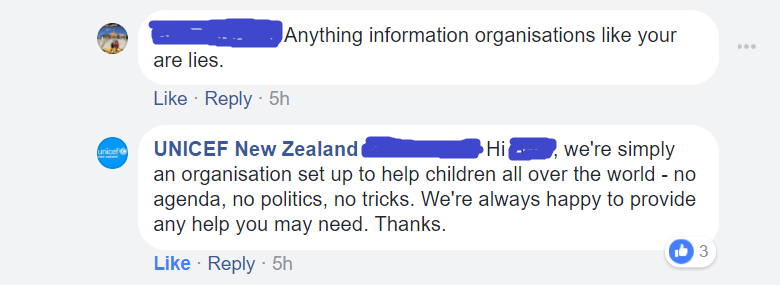

Blue chimes in (you get the gist) and really doesn’t think UNICEF New Zealand is telling the truth.

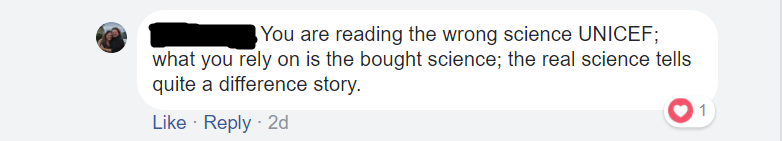

And then there’s Black, with her accusation of shills and ‘paid’ science. We’ve met Black before.

And yet these claims are so far from what all the data tell us (that vaccines are really rather safe, that they’ve probably saved millions of lives and avoided or reduced a lot of suffering) that you have to wonder why. Why are these three individuals, and many others like them, so ready not only to write off modern evidence-based medicine, but to believe the pronouncements of The Health Ranger, Joseph Mercola, Andrew Wakefield, Russell Blaylock, and others like them? Quite a lot has been written on this lately, as it’s a question that concerns doctors, scientists, educators, and science educators alike.

Part of the problem probably lies with the ease with which ‘fake news’ spreads these days. A study just published in Science (Vosoughi, Roy & Aral, 2018) looked at 126,000 ‘rumour cascades’ (the retweeting of ideas & rumours) on Twitter, and found that fake news is more likely to go viral:

> Falsehood diffused significantly farther, faster, deeper, and more broadly than the truth in all categories of information

although fake political news travelled fastest & furthest, and

> false news was more novel than true news, which suggests that people were more likely to share novel information. Whereas false stories inspired fear, disgust, and surprise in replies, true stories inspired anticipation, sadness, joy, & trust.

The story is also discussed here at The Atlantic.

It’s not all down to bots, either: apparently Twitter bots spread true & false tales even-handedly (if bots have hands!); it’s the propensity of people to spread the false stuff preferentially that’s the problem. (I’ve seen this in my own FB circle, with some friends being quite uncritical in sharing rumours that a moment’s fact-checking shows to be untrue.) So why these differences? What is it about human judgement that sees falsehoods spread so fast?

Part of the answer may lie in the architecture & connectivity of our brains. This article at The Genetic Literacy Project notes that

> Some people, especially if they are young, will back away from strong beliefs if exposed to the scientific perspective over a long time period. But in some instances, people hold even tighter to their beliefs, when challenged with facts. It’s a baffling phenomenon, yet science has come a long way in understanding the underlying brain functions.

Apparently,

> certain brain structures … are less active during rational thinking, and more active while a person maintains irrational beliefs in the face of counter-evidence.

Because these parts of the brain are also involved in stress responses, it’s been speculated that

> the tendency to hold tightly to beliefs in the face of counter-evidence may be the result of a kind of stress response … [one that uses a particular neural pathway] to process signals from what the emotional mind perceives as threatening.

This is similar to the ideas discussed here at Debunking Denialism, where it’s suggested that some people may believe in conspiracy theories because this helps them make sense of an uncertain & changing world, plus such theories

> provide psychologically satisfying answers to ambiguities and allows people to have a comforting, yet faulty, sense of certainty in the face of a lack of information.

Unfortunately these theories[B] also insulate believers from information contradicting their beliefs, allowing them to see anyone providing that opposing evidence as bought & paid for (see Black above). And the fact that conspiracy theories often generate quite strong negative emotions may help explain their spread, as suggested by Vosoughi, Roy, & Aral (2018).

Another possible reason for belief in ideas and concepts that run counter to everything science tells us about the world is discussed by Simon Oxenham on the British Psychological Society’s Research Digest page. Writing about a recently published paper, he asks:

> Could it be the case that knowing that most people doubt a conspiracy actually makes believing it more appealing, by fostering in the believer a sense of being somehow special?

Oxenham describes how the researchers found that if an individual believed in one conspiracy theory (e.g. that fluoridation is harmful) they were more likely to believe in others (e.g. that vaccination is also harmful, or that humans have nothing to do with global climate change), something that Orac has described as ‘crank magnetism’. Those surveyed were also asked to rate their own ‘need to feel unique’, and it seems that this, too, was correlated with agreement with conspiracy theories, particularly when the person believed that a given theory was a minority opinion.

Now, as anyone who’s read the comment threads on any post or FB article about vaccination or fluoridation will know, these beliefs can be incredibly difficult to change. And yet it’s necessary to try, because these beliefs can also be dangerous if they gain wide currency: witness the outbreaks of measles in Europe and in some US states, due to uptake of the idea that vaccines are harmful. Oxenham discusses the findings of two other studies (the originals are here and here), concluding that

> popular conspiracy theories may be best dealt with through early education that debunks dangerous conspiracy beliefs before they have the opportunity to take hold in the wild

And that’s because these ideas can be very difficult to counter once they’ve become established in someone’s mind.

It’s a big, & daunting, task.

[A] And yes, I know! To paraphrase Indiana Jones: [science] is the search for facts, not truth.

[B] Really, hypotheses.

S. Vosoughi, D. Roy, & S. Aral (2018) The spread of true and false news online. Science 359 (6380): 1146-1151. doi: 10.1126/science.aap9559